Chain Rule for Mutual Information

The mutual information of random variables X and Y is defined by

I (X; Y ) = H (X ) − H (X|Y) = H (Y ) − H (Y|X)
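As a rough numerical illustration of this definition, the sketch below computes both forms, H(X) − H(X|Y) and H(Y) − H(Y|X), from a small joint distribution and shows that they agree. The joint pmf (a fair bit X observed through a channel that flips it with probability 0.1) and the helper names `entropy` and `marginal` are assumptions made up for this example. The conditional entropies are obtained from the identity H(X|Y) = H(X,Y) − H(Y).

```python
from math import log2

def entropy(pmf):
    """Shannon entropy in bits of a dict mapping outcomes to probabilities."""
    return -sum(p * log2(p) for p in pmf.values() if p > 0)

def marginal(joint, axis):
    """Marginal pmf of one coordinate of a joint pmf keyed by (x, y) tuples."""
    out = {}
    for outcome, p in joint.items():
        out[outcome[axis]] = out.get(outcome[axis], 0.0) + p
    return out

# Hypothetical joint pmf p(x, y): X is a fair bit, Y equals X flipped with probability 0.1.
joint_xy = {(0, 0): 0.45, (0, 1): 0.05, (1, 0): 0.05, (1, 1): 0.45}

h_x = entropy(marginal(joint_xy, 0))   # H(X)
h_y = entropy(marginal(joint_xy, 1))   # H(Y)
h_xy = entropy(joint_xy)               # H(X, Y)

h_x_given_y = h_xy - h_y               # H(X|Y) = H(X,Y) - H(Y)
h_y_given_x = h_xy - h_x               # H(Y|X) = H(X,Y) - H(X)

i_from_x = h_x - h_x_given_y           # I(X;Y) = H(X) - H(X|Y)
i_from_y = h_y - h_y_given_x           # I(X;Y) = H(Y) - H(Y|X)

print(i_from_x, i_from_y)              # both are about 0.531 bits
```

Both expressions reduce to H(X) + H(Y) − H(X,Y), which is why the two forms of the definition give the same value.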

Conditional mutual information of random variables X and Y given Z is defined by

I (X; Y|Z) = H (X|Z) − H (X|Y,Z)

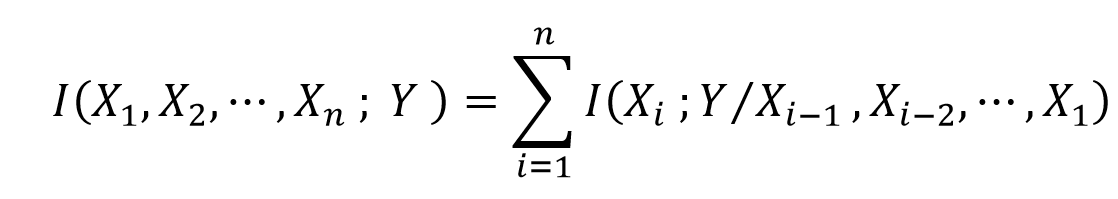

The mutual information between random variables X1, X2, …, Xn and Y satisfies the chain rule

I (X1, X2, …, Xn; Y) = Σ (i = 1 to n) I (Xi; Y | X1, X2, …, X(i−1))
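For n = 2 the chain rule for mutual information reads I(X1, X2; Y) = I(X1; Y) + I(X2; Y|X1). The sketch below checks this numerically on an assumed toy distribution: X1 and X2 are independent fair bits and Y = X1 XOR X2. The distribution and the helper names `entropy`, `marginal` and `H` are illustrative choices, not part of the original text. The conditional term uses the definition I(X2; Y|X1) = H(X2|X1) − H(X2|Y, X1), with each conditional entropy written as a difference of joint entropies.

```python
from math import log2

def entropy(pmf):
    """Shannon entropy in bits of a dict mapping outcomes to probabilities."""
    return -sum(p * log2(p) for p in pmf.values() if p > 0)

def marginal(joint, axes):
    """Marginalize a joint pmf keyed by tuples onto the given coordinate indices."""
    out = {}
    for outcome, p in joint.items():
        key = tuple(outcome[a] for a in axes)
        out[key] = out.get(key, 0.0) + p
    return out

def H(joint, axes):
    """Joint entropy of the coordinates listed in axes."""
    return entropy(marginal(joint, axes))

# Hypothetical joint pmf over (x1, x2, y): independent fair bits with y = x1 XOR x2.
# Coordinate 0 is X1, coordinate 1 is X2, coordinate 2 is Y.
joint = {(x1, x2, x1 ^ x2): 0.25 for x1 in (0, 1) for x2 in (0, 1)}

# I(X1, X2; Y) = H(X1, X2) + H(Y) - H(X1, X2, Y)
i_joint = H(joint, (0, 1)) + H(joint, (2,)) - H(joint, (0, 1, 2))

# I(X1; Y) = H(X1) + H(Y) - H(X1, Y)
i_x1_y = H(joint, (0,)) + H(joint, (2,)) - H(joint, (0, 2))

# I(X2; Y | X1) = H(X2 | X1) - H(X2 | Y, X1)
h_x2_given_x1 = H(joint, (0, 1)) - H(joint, (0,))
h_x2_given_y_x1 = H(joint, (0, 1, 2)) - H(joint, (0, 2))
i_x2_y_given_x1 = h_x2_given_x1 - h_x2_given_y_x1

print(i_joint, i_x1_y, i_x2_y_given_x1)   # 1.0 0.0 1.0
```

In this example X1 alone tells nothing about Y, yet once X1 is known, X2 determines Y completely, so the whole bit of information appears in the conditional term.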
