Chain rule for Entropy

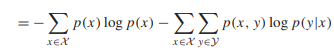

The definitions of joint entropy and conditional entropy are natural in the following sense: the entropy of a pair of random variables equals the entropy of one variable plus the conditional entropy of the other given the first, i.e.

H(X, Y) = H(X) + H(Y|X)
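As a quick numerical check of this identity, the sketch below computes H(X, Y), H(X) and H(Y|X) for a small joint distribution. The probabilities in `joint` are made up for illustration and are not taken from the text; H(Y|X) is computed directly from the conditional distributions p(y|x), not by subtraction, so the comparison is not circular.

```python
from math import log2

def entropy(probs):
    """Shannon entropy in bits of an iterable of probabilities."""
    return -sum(p * log2(p) for p in probs if p > 0)

# Hypothetical joint distribution p(x, y); keys are (x, y) pairs.
joint = {(0, 0): 0.5, (0, 1): 0.25, (1, 0): 0.125, (1, 1): 0.125}

# Marginal distribution p(x), obtained by summing out y.
p_x = {}
for (x, _), p in joint.items():
    p_x[x] = p_x.get(x, 0.0) + p

h_xy = entropy(joint.values())   # H(X, Y)
h_x = entropy(p_x.values())      # H(X)

# H(Y|X) = sum over x of p(x) * H(Y | X = x)
h_y_given_x = 0.0
for x, px in p_x.items():
    conditional = [p / px for (xx, _), p in joint.items() if xx == x]
    h_y_given_x += px * entropy(conditional)

print("H(X, Y)         =", h_xy)
print("H(X) + H(Y|X)   =", h_x + h_y_given_x)
```

Both printed values should agree up to floating-point rounding.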

Alternatively, we can write H(X1, X2) = H(X1) + H(X2|X1). This can be extended to X1, X2, X3 as

H(X1, X2, X3) = H(X1) + H(X2|X1) + H(X3|X1, X2)
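The three-variable expansion can be checked with a small sketch. The helper names `marginal` and `chain_terms` are hypothetical, and the example distribution (two independent fair bits X1, X2 with X3 = X1 XOR X2) is an assumption chosen for illustration. Each term H(Xi | X1, ..., Xi−1) is computed from conditional distributions, and the terms are then summed and compared with the joint entropy.

```python
from collections import defaultdict
from math import log2

def entropy(probs):
    """Shannon entropy in bits of an iterable of probabilities."""
    return -sum(p * log2(p) for p in probs if p > 0)

def marginal(joint, k):
    """Marginal distribution of the first k coordinates of each outcome."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[outcome[:k]] += p
    return out

def chain_terms(joint):
    """Return [H(X1), H(X2|X1), ..., H(Xn|X1, ..., Xn-1)] in bits."""
    n = len(next(iter(joint)))
    terms = []
    for i in range(1, n + 1):
        context = marginal(joint, i - 1)  # p(x1, ..., x_{i-1}); {(): 1.0} when i = 1
        upto = marginal(joint, i)         # p(x1, ..., x_i)
        h = 0.0
        for ctx, p_ctx in context.items():
            if p_ctx == 0:
                continue
            # Conditional distribution of X_i given this context.
            cond = [p / p_ctx for o, p in upto.items() if o[:i - 1] == ctx]
            h += p_ctx * entropy(cond)
        terms.append(h)
    return terms

# Assumed example: X1, X2 independent fair bits, X3 = X1 XOR X2.
joint = {(a, b, a ^ b): 0.25 for a in (0, 1) for b in (0, 1)}

terms = chain_terms(joint)
joint_entropy = entropy(joint.values())
print("terms:", terms)
print("sum of terms:", sum(terms), "joint entropy:", joint_entropy)
```

Because `chain_terms` reads the number of variables from the outcome tuples, the same sketch should work for longer tuples as well.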

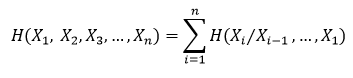

Similarly, for X1, X2, X3, …, Xn the joint entropy is

H(X1, X2, …, Xn) = H(X1) + H(X2|X1) + … + H(Xn|X1, …, Xn−1)

Subadditivity rule

This rule says that conditioning can only reduce entropy on average, never increase it. However, conditioning on a specific realization B = b does not necessarily reduce entropy: H(A|B = b) can exceed H(A).

i.e. H(A|B) = H(A, B) − H(B) ≤ H(A) + H(B) − H(B) = H(A), where the inequality uses subadditivity of the joint entropy, H(A, B) ≤ H(A) + H(B),

or H(A|B) ≤ H(A)
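The difference between conditioning on average and conditioning on one realization can be seen in a small sketch. The joint distribution below is an assumed example, not one given in the text: observing B = 2 makes A more uncertain than it was unconditionally, yet the average H(A|B) still does not exceed H(A).

```python
from math import log2

def entropy(probs):
    """Shannon entropy in bits of an iterable of probabilities."""
    return -sum(p * log2(p) for p in probs if p > 0)

# Assumed joint distribution p(a, b); keys are (a, b) pairs.
# The pair (1, 1) has probability 0 and is omitted.
joint = {(2, 1): 0.75, (1, 2): 0.125, (2, 2): 0.125}

# Marginals p(a) and p(b).
p_a, p_b = {}, {}
for (a, b), p in joint.items():
    p_a[a] = p_a.get(a, 0.0) + p
    p_b[b] = p_b.get(b, 0.0) + p

h_a = entropy(p_a.values())      # H(A)
h_b = entropy(p_b.values())      # H(B)
h_ab = entropy(joint.values())   # H(A, B)

# H(A | B = b) for each specific realization b.
h_a_given_each_b = {
    b: entropy(p / pb for (_, bb), p in joint.items() if bb == b)
    for b, pb in p_b.items()
}

# H(A|B) is the average of H(A | B = b) weighted by p(b).
h_a_given_b = sum(p_b[b] * h for b, h in h_a_given_each_b.items())

print("H(A)            =", h_a)
print("H(A | B = b)    =", h_a_given_each_b)
print("H(A|B)          =", h_a_given_b)
print("H(A, B) - H(B)  =", h_ab - h_b)
```

In this example H(A | B = 2) is 1 bit, which is larger than H(A), while the averaged H(A|B) stays below H(A) and matches H(A, B) − H(B).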